What ChatGPT and DeepMind Tell Us About AI

February 20, 2023

What's interesting is that the really hard problem AI has not been applied to is how to manage these technologies in our socio-economic-cultural system.

The world is agog at the apparent power of ChatGPT and similar programs to compose human-level narratives and generate images from simple commands.

Many are succumbing to the temptation to extrapolate these powers to near-infinity, i.e. the Singularity in which AI reaches super-intelligence Nirvana.

All the excitement is fun, but it's more sensible to start by placing ChatGPT in the context of AI history and our socio-economic system.

I became interested in AI in the early 1980s, and read numerous books by the leading AI researchers of the time.

AI began in the 1950s with the dream of a Universal General Intelligence, a computational machine that matched humanity's ability to apply a generalized intelligence to any problem.

This quickly led to the daunting realization that human intelligence wasn't just logic or reason; it was an immensely complex system that depended on sight, heuristics (rules of thumb), feedback and many other subsystems.

AI famously goes through cycles: excitement about advances, followed by deflating troughs as the limits of those advances become clear.

The increase in computing power and software programming in the 1980s led to advances in these sub-fields: machine vision, algorithms that embodied heuristics, and so on.

At the same time, philosophers like Hubert Dreyfus and John Searle were exploring what we mean by knowing and understanding, and questioning whether computers could ever achieve what we call "understanding."

This paper (among many) summarizes the critique of AI being able to duplicate human understanding:

Intentionality and Background: Searle and Dreyfus against Classical AI Theory.

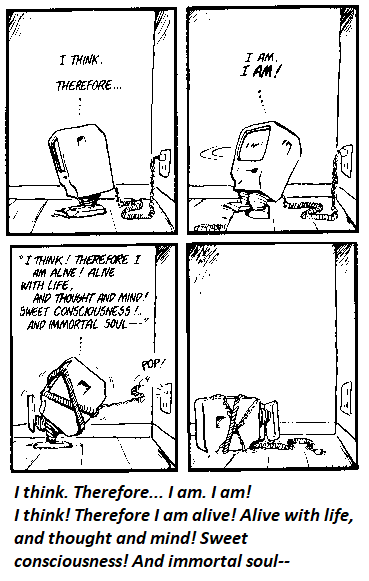

Simply put, was running a script / algorithm actually "understanding" the problem as humans understand the problem?

The answer is of course no. A computer can be scripted / programmed to pass the Turing Test, that is, to mimic human language and responses, but that doesn't mean the computer has human understanding. It's just distilling human responses into heuristics that mimic human responses.

One result of this discussion of consciousness and understanding was for AI to move away from the dream of General Intelligence to the specifics of machine learning.

In other words, never mind trying to make AI mimic human understanding, let's just enable it to solve complex problems.

The basic idea in machine learning is to distill the constraints and rules of a system into algorithms, and then enable the program to apply these tools to real-world examples.

Given enough real-world examples, the system develops heuristics (rules of thumb) about what works and what doesn't, and these heuristics are not necessarily visible to the human researchers.

In effect, the machine-learning program becomes a "black box" whose advances are opaque to those who programmed its tools and digitized real-world examples into forms the program could work with.
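As a toy illustration of a program developing a rule of thumb from examples, here is a minimal sketch in Python. The data and the function names (`learn_threshold`, `predict`) are hypothetical, and the technique is a simple one-feature threshold search, not anything used by the systems discussed in this essay. Note that this tiny rule is fully transparent; the "black box" quality arises when a real system learns millions of such parameters at once.

```python
# Toy illustration: the program is never told the cutoff; it finds one
# by checking which candidate rule agrees with the most examples.
# All data and names here are hypothetical.

def learn_threshold(examples):
    """Return the threshold that best separates label 0 from label 1.

    examples: list of (value, label) pairs, where label is 0 or 1.
    The learned rule is: predict 1 when value >= threshold.
    """
    values = sorted({v for v, _ in examples})
    # Candidate thresholds: midpoints between neighboring observed values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_correct = None, -1
    for t in candidates:
        correct = sum((v >= t) == bool(label) for v, label in examples)
        if correct > best_correct:
            best_threshold, best_correct = t, correct
    return best_threshold


def predict(threshold, value):
    """Apply the learned rule of thumb to a new value."""
    return 1 if value >= threshold else 0


examples = [(1.0, 0), (2.0, 0), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]
threshold = learn_threshold(examples)
print("learned threshold:", threshold)
print("prediction for 5.0:", predict(threshold, 5.0))
```

Nobody typed the cutoff into the program; it came out of the examples, which is the sense in which the heuristic is learned and not scripted.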

It's important to differentiate this machine learning from statistical analysis using statistical algorithms.

For example, if a program has been designed to look for patterns and statistically relevant correlations, it sorts through millions of social-media profiles and purchasing histories and finds that Republican surfers who live in (say) Delaware are likely to be fans of Chipotle.

This statistical analysis is called "big data" and while it has obvious applications for marketing everything from candidates to burritos, it doesn't qualify as machine learning.
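The kind of correlation hunting described above can be sketched in a few lines. This is only an illustration under loud assumptions: the eight profiles are invented, the field layout is hypothetical, and "lift" (the segment's rate divided by the overall rate) is just one common way to express such a correlation.

```python
# Hypothetical records: (party, hobby, state, likes_brand). All values invented.
profiles = [
    ("R", "surfing", "DE", True),
    ("R", "surfing", "DE", True),
    ("R", "surfing", "DE", False),
    ("D", "surfing", "DE", False),
    ("R", "golf", "DE", False),
    ("D", "golf", "NJ", True),
    ("R", "surfing", "NJ", False),
    ("D", "hiking", "NJ", False),
]


def rate(rows):
    """Share of rows in which the person likes the brand."""
    return sum(1 for r in rows if r[3]) / len(rows)


# The segment of interest: Republican surfers in Delaware.
segment = [r for r in profiles if r[:3] == ("R", "surfing", "DE")]

baseline_rate = rate(profiles)       # how common the preference is overall
segment_rate = rate(segment)         # how common it is inside the segment
lift = segment_rate / baseline_rate  # above 1 means over-represented

print(f"baseline {baseline_rate:.3f}, segment {segment_rate:.3f}, lift {lift:.2f}")
```

Everything here is counting and division over a fixed question posed by the analyst; nothing is learned or adjusted from the examples.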

In a similar way, algorithms like ChatGPT that generate natural-language narratives from databases and heuristics do not qualify as machine learning unless they fashion advances within a "black box" in which the input (the request) is known and the output is known, but the process is unknown.

Google has an AI team called DeepMind that tackled the immensely complex task of figuring out how proteins, built from chains of hundreds or thousands of amino acids, fold up into compact shapes in a fraction of a second.

The problem of computing all the possible folds in 200 million different proteins cannot be solved by mere brute-force calculation of all permutations, and so it required breaking down each step of the process into algorithms.
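A back-of-the-envelope calculation shows why brute force is hopeless even for a single small protein. Every constant below is an illustrative assumption (a 100-residue chain, three possible conformations per residue, ten trillion conformations checked per second); none of these figures comes from DeepMind.

```python
# Rough estimate of brute-force search cost for ONE small protein.
# Every constant here is an illustrative assumption, not a measured value.
RESIDUES = 100                  # assumed chain length (a smallish protein)
STATES_PER_RESIDUE = 3          # assumed conformations per amino acid
SAMPLES_PER_SECOND = 10**13     # assumed search speed
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 1.38e10

# Each residue's state is chosen independently, so the counts multiply.
conformations = STATES_PER_RESIDUE ** RESIDUES
years_needed = conformations / SAMPLES_PER_SECOND / SECONDS_PER_YEAR

print(f"conformations: {conformations:.2e}")
print(f"years to enumerate: {years_needed:.2e}")
print(f"multiples of the universe's age: {years_needed / AGE_OF_UNIVERSE_YEARS:.2e}")
```

Under these assumptions the enumeration takes vastly longer than the age of the universe, and that is for one protein, not 200 million.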

The eventual product, AlphaFold, has 32 component algorithms, each of which encapsulates different knowledge bases from the relevant disciplines (biochemistry, physics, etc.).

DeepMind's AI Makes Gigantic Leap in Solving Protein Structures.

This is how project leader Demis Hassabis describes the "black box" capabilities:

"It's clear that AlphaFold 2 is learning something implicit about the structure of chemistry and physics. It sort of knows what things might be plausible.

I think AlphaFold has captured something quite deep about the physics and the chemistry of molecules... it's almost learning about it in an intuitive sense."

But there are limits on what AlphaFold can do and what it's good at: "I think we'll have more and more researchers looking at protein areas that AlphaFold is not good at predicting."

In other words, AlphaFold can't be said to "understand" the entirety of protein folding. It's good at limiting the possible folds to a subset and presenting those possibilities in a form that can be compared to actual protein structures identified by lab processes. It can also assign a confidence level to each of its predictions.

This is useful but far from "understanding," and it is a disservice to claim otherwise.

What's interesting is that the really hard problem AI has not been applied to is how to manage these technologies in our socio-economic-cultural system.

This essay was first published as a weekly Musings Report sent exclusively to subscribers and patrons at the $5/month ($50/year) and higher level. Thank you, patrons and subscribers, for supporting my work and free website.
